Recently, I bought Jonathan Cummings’ book, The Rating Game. It made me wonder how the author sees the role and importance of the golf course lists published by leading golf magazines such as Golf Digest, Golf Magazine, and Golfweek.

To what extent can leading golf magazines’ lists influence golfers’ decisions in 2022?

Lists of almost any kind influence people.

Ranked lists are everywhere because we are forever looking for shortcuts. From the US News & World Report college rankings to Michelin stars for restaurants, lists are a quick way to get insight into what is best without having to do the research. Nowhere are rankings more popular than in sports, including golf.

There are a number of ways golf course lists influence golfers’ decisions. First off, lists drive advertising. Golf apparel and equipment companies, as well as golf travel destinations, all know that “best of” golf lists interest a wide cross-section of golfers.

Magazine sales of “best of” issues regularly outpace sales of regular issues. I don’t know if anyone has ever correlated golf sales with “best of” issues, but it would be an interesting exercise. Sounds like a project for the National Golf Foundation!

“Best of” lists can also drive prices, which may or may not influence a golfer’s decision. It’s not hard to imagine a club increasing greens fees and/or initiation fees and dues based on a high ranking. Logoed apparel in the pro shops of highly ranked courses may also command higher prices.

The high costs associated with highly ranked courses may drive away budget-minded golfers. Conversely, a golfer for whom cost is no object may be attracted to the pricier venues because of the implied prestige and potential exclusivity.

This is particularly true of big-name resorts. In the US, a couple wanting to play Pebble Beach may not be able to experience those iconic links for less than $5,000 (two nights’ lodging, golf, and meals).

In the UK, greens fees in the $500 range at Royal County Down, Muirfield, and Trump Turnberry, all list-toppers, are becoming the norm and are almost certainly a factor in a golfer’s travel planning, especially for trips to highly ranked resorts and courses.

How would you modify the ranking methods of leading golf magazines?

Chapter 7 of The Rating Game covers this very topic. Each of the “Big Three” US ranking panels (Golf Digest, Golf Magazine, and Golfweek) publishes various lists (top 100, best in state, best new, etc.).

What all of these lists have in common is that each is a rank-ordered list: course #1 is better than #2, which is better than #3, and so on.

To arrive at these rankings, each of the three magazines uses an intermediate step: they ask panelists (evaluators) to go out and evaluate a candidate course using a numeric grading system.

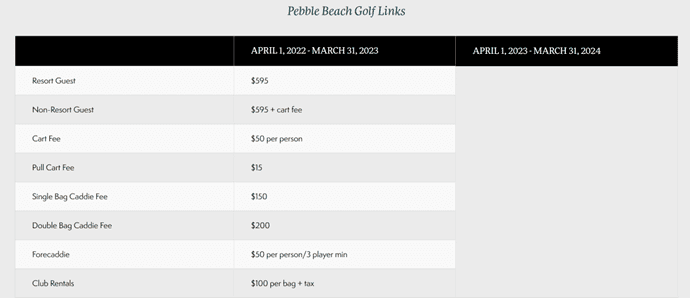

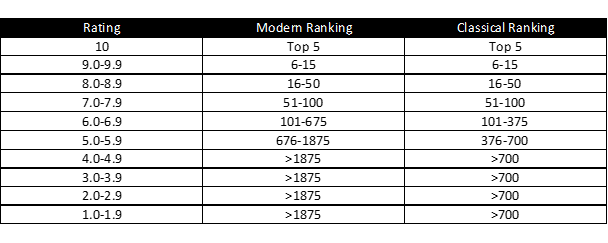

High numeric grades reflect top courses, and low grades reflect lower-ranked courses. Golfweek uses a 90-point scale (1.0 to 10.0 in tenths). Golf Magazine has a similar 10-to-100-point grading system, and Golf Digest tasks its raters with providing 1-to-10 scores on design categories, which the management staff combines to arrive at total ranking scores.

Each magazine’s numeric scores are related to a suggested ranking (each in a slightly different way). Here is an example of Golfweek’s score/ranking relationship.

Finally, each magazine averages all the evaluators’ numeric scores for each golf course and rank-orders those averages from best to worst to arrive at the published lists.
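To make that final step concrete, here is a minimal Python sketch of averaging and rank-ordering. The course names and grades are hypothetical, and none of the magazines' actual pipelines (such as Golf Digest's combining of category scores) is reproduced here.

```python
from statistics import mean

def rank_by_average(scores: dict[str, list[float]]) -> list[tuple[str, float]]:
    """Average each course's grades, then rank-order the averages from best to worst."""
    averages = {course: mean(grades) for course, grades in scores.items()}
    return sorted(averages.items(), key=lambda item: item[1], reverse=True)

# Hypothetical panel data: each course maps to the 1-10 grades its evaluators submitted.
panel_scores = {
    "Course A": [9.0, 8.5, 9.5, 8.0],        # average 8.75
    "Course B": [8.0, 9.0, 8.5],             # average 8.5
    "Course C": [7.5, 8.0, 7.0, 8.5, 7.5],   # average 7.7
}

for rank, (course, avg) in enumerate(rank_by_average(panel_scores), start=1):
    print(f"{rank}. {course}: {avg:.2f}")
```

Note that each course can have a different number of evaluators. The average hides both that difference and the disagreement among the evaluators.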

Each system requires at least one intermediate step (two in the case of Golf Digest) to arrive at its ranked lists.

Here lies the problem

And here lies the problem. Assigning a numeric grading scale to measure the character of a golf course is by nature a subjective exercise.

In an absolutely perfect world where all golf course evaluators agree on a rating, we would be OK using a numeric system. But, obviously, everybody agreeing on nearly anything is unlikely.

If we asked five evaluators to assign a 1-to-10 grade to the Old Course at St Andrews, we would be far more likely to get five grades of, say, 10, 9, 7, 9, and 10 than we would 9, 9, 9, 9, and 9.

Both sets of numbers average 9, but one is consistent across raters (no spread in the data) and the other is inconsistent across raters (spread in the data).
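The difference between the two sets can be quantified with a short Python sketch. It computes the average and the standard deviation of the two hypothetical grade sets from the Old Course example. Using the population standard deviation, rather than the sample version, is our choice. Either one shows the same contrast.

```python
from statistics import mean, pstdev

# The two hypothetical sets of five 1-10 grades for the Old Course.
spread_out = [10, 9, 7, 9, 10]
consistent = [9, 9, 9, 9, 9]

for label, grades in [("spread out", spread_out), ("consistent", consistent)]:
    # Same average, very different spread (population standard deviation).
    print(f"{label}: mean={mean(grades)}, std dev={pstdev(grades):.2f}")
```

Both sets average 9. The first has a standard deviation of about 1.1 points, while the second has none at all.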

With data spread comes uncertainty, or data error. Common sources of error include sampling error, input omissions, input errors, and bias. All of them increase data spread and uncertainty and corrupt the final averages, which in turn corrupts the reported rankings.
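Here is a small illustration of how a single input error can ripple through to the rankings. Two hypothetical courses sit two-tenths of a point apart, and one mistyped grade (a 9 keyed in as a 7) is enough to reverse their order. The numbers are invented purely for illustration.

```python
from statistics import mean

def leader(scores: dict[str, list[int]]) -> str:
    """Return the course with the highest average grade."""
    return max(scores, key=lambda course: mean(scores[course]))

clean = {
    "Course X": [9, 9, 8, 9, 9],  # average 8.8
    "Course Y": [9, 8, 9, 9, 8],  # average 8.6
}

# Same data, except one evaluator's 9 for Course X was keyed in as a 7.
with_typo = {
    "Course X": [9, 9, 8, 7, 9],  # average 8.4
    "Course Y": [9, 8, 9, 9, 8],  # average 8.6
}

print(leader(clean))      # Course X
print(leader(with_typo))  # Course Y
```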

An alternate method

There’s an alternate method that makes more sense: eliminate the intermediate step. Rather than assign numbers to golf courses, ask each evaluator to simply rank the courses he or she has seen from best to worst. Then combine the individual lists to generate a single overall ranked list.

There are several ways to do this. One is a pairwise, head-to-head comparison of every course against every other course: count how many voters prefer course A over B, how many prefer A over C, B over C, and so on.

The concluding step is simply to count each course’s head-to-head wins and rank-order the courses by their total number of wins (in reality, it’s slightly more complicated, as you also have to account for ties).
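Here is a minimal Python sketch of that head-to-head counting, a scheme generally known as Copeland's method. It is not necessarily the exact procedure laid out in Chapter 7. Several choices are assumptions made for illustration. Ballots may be partial, because evaluators rank only the courses they have seen. A pair is decided only by evaluators who ranked both courses. A tied match-up splits the win half-and-half.

```python
from itertools import combinations

def pairwise_rank(ballots: list[list[str]]) -> list[tuple[str, float]]:
    """Rank courses by head-to-head wins (Copeland-style), splitting tied match-ups."""
    courses = sorted({course for ballot in ballots for course in ballot})
    points = {course: 0.0 for course in courses}
    for a, b in combinations(courses, 2):
        a_over_b = b_over_a = 0
        for ballot in ballots:
            # Only evaluators who have seen (and ranked) both courses vote on this pair.
            if a in ballot and b in ballot:
                if ballot.index(a) < ballot.index(b):
                    a_over_b += 1
                else:
                    b_over_a += 1
        if a_over_b > b_over_a:
            points[a] += 1
        elif b_over_a > a_over_b:
            points[b] += 1
        elif a_over_b > 0:
            # Head-to-head tie: split the win (an assumed convention).
            points[a] += 0.5
            points[b] += 0.5
        # If no evaluator has seen both courses, neither gets a point.
    # Sort by total wins; equal totals keep alphabetical order (Python's sort is stable).
    return sorted(points.items(), key=lambda item: item[1], reverse=True)

# Hypothetical ballots: each evaluator lists only the courses he or she has seen, best first.
ballots = [
    ["A", "B", "C"],
    ["B", "A", "D"],
    ["A", "C", "D"],
    ["C", "B"],
]

for course, wins in pairwise_rank(ballots):
    print(course, wins)
```

With these four ballots, A comes out on top with 2.5 wins: it beats C and D and splits its match-up with B. B follows with 2.0, then C with 1.5 and D with 0.0. A real system would also need a rule for courses that finish level on total wins.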

Asking voters to rank-order their choices is not uncommon. In Alaska’s recent special election for the state’s seat in the US House of Representatives, Sarah Palin was just “defeated” using just such a system (voters rank-ordered the finalists who advanced from a top-four primary, and she lost in the ranked-choice count).

Again, the details of this proposed solution are explained in Chapter 7 of The Rating Game.

What has a bigger influence on customer behavior: review sites (e.g. Leadingcourses.com) vs. golf magazines’ lists?

I think the magazine lists and review sites are targeting different audiences.

For golf magazines, publishing top 100 lists, especially global ones, is done more for journalistic reasons than to influence customer behavior. Publishing top lists gives a magazine a certain amount of prestige, credibility, and authority, which in turn drives advertising and sales of issues.

People read and enjoy these lists because they make them dream of seeing all the great courses. The reality is that only a tiny fraction of readers will ever see even a modest number of the world’s top 100.

Regional top 100 lists probably have a better chance of influencing reader/customer behavior. Newly ranked regional resort and daily-fee courses will almost certainly draw more regional customers.

Likewise, regional private courses looking for additional members would likely become more attractive with a top ranking.

The review sites are a little different

The review sites are a little different. Some run ranked lists, and some do not. Aussie Darius Oliver has long run his Planet Golf lists. Darius has a smaller but ardent following, and his lists always have a few eye-openers.

Over the years, Golfclubatlas.com has irregularly run top 100 lists, with most of the exposure kept internal to the site’s 1,500 discussion participants. I’m not very familiar with Leadingcourses.com, but it seems similar to top100golfcourses.com: both generate sets of lists from user input.

In the case of Top100golfcourses, director David Davis has put together a rather stellar collection of international reviewers who have collectively canvassed the world’s golf courses.

I don’t know the exact number, but the Davis site has reviews of an impressive 7,000 or so of the world’s golf courses, with rankings for many countries and/or regions. It’s not just the gold standard but the platinum standard for online regional rankings and reviews!

I would not think of traveling for golf to a place I haven’t been without first checking out what Davis’s reviewers say. Their regional rankings, besides the individual reviews, are extremely helpful for travel planning.

I believe the review sites target tour operators, travel planners, and traveling golfers, and so they influence those groups’ business and travel decisions. People who just love to pore over top golf lists would probably be more attracted to the magazine lists than to those posted on review sites.

Which golf magazine’s list is the most powerful/influential? (That can really contribute to sales & marketing)

I suspect that costs to advertise in a golf magazine are related to the size of that magazine’s circulation (either hard copy or online). Big circulations command higher advertising fees.

Using that metric alone, I believe the Golf Digest lists, particularly now that the magazine publishes various world lists, could be termed the most powerful and influential, as Golf Digest is read on a global scale.

Other major magazines’ lists probably “move the needle” too, driving associated sales and marketing, but to a lesser extent.

Now, if we get into a regional discussion, that’s another story. There’s a long-running publication out of the Washington DC area called Golf Styles. Golf Styles concentrates on all things golf in the mid-Atlantic states (Virginia, Maryland, Delaware, the Carolinas, and New Jersey).

In the early 2000s, when golf construction in the US was booming, Golf Styles would regularly review a new resort/daily fee/private course that opened in the area. They would also run a top 50 courses in the mid-Atlantic list.

If a new course was reviewed and made the list, it was almost a certainty that the course would begin advertising in Golf Styles. So, on a regional scale, here’s an example of how lists can drive the advertising side of golf.

Canada & Australia – golf magazines

SCOREGolf in Canada and Australian Golf down under both run top-in-country lists. Although both are large countries geographically, from a golfing standpoint Canada and Australia are more regional in nature because of the relatively modest number of courses within their borders.

As in the DC example, if a new course were to make either of these two lists, the “regional” advertisers would likely come flocking. The same goes for the models in South Africa, New Zealand, Japan, and the UK, to name a few.

I know less about the various European regional magazines (with their own regional lists), but I suspect similarities: regional country lists influence and drive industry advertising and, consequently, golf sales and marketing within those regions.

How would you rank these rating factors based on importance? Design Variety, Shot Values, Conditioning, Memorability, Aesthetics & Ambiance. Explain it!

These are all worthwhile components of golf course architecture (as are others) but to what degree? Is conditioning more important than shot values, and variety more than memorability?

I could give you my opinion on the relative importance of each, but my opinion would be no better or worse than yours. The answer is – there isn’t a “right” answer.

This is one reason I’m not a fan of a category-based system of determining golf course rankings.

For a category to be useful, it must first pass two tests: it must be meaningful, and it must be measurable. Suppose I asked an evaluator, on arriving at a candidate course, to look up at the sky and give cloudiness a 1-to-10 rating: 1 for clear skies, 5 for half cloud/half clear, and 10 for completely overcast.

I’m confident that an evaluator could assign a reliable number to this cloudiness factor. Moreover, I’m pretty confident I would get close to the same number even using different evaluators.

But obviously, what use is knowing cloud cover if you want to determine the quality of a golf course design? This “category” is measurable but meaningless.

Conversely, if I asked my evaluator to judge the character of a golf course (most would agree it is an important and common component of highly ranked layouts) on a 1-to-10 scale (1 for low character, 10 for high character), I’d probably get a pretty wide range of numbers across multiple evaluators.

This is an example of a meaningful category that is tough to assign a number to.

Useful categories must rate highly on both meaningfulness AND measurability, AND they must be consistent across evaluators.

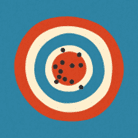

Using the classic target graphic above to represent a category score, what we want is a tight collection of scores: the tighter, the better. Why? Because whatever isn’t “tight” acts as an error and corrupts our results... for that category.

But it gets worse. A category’s scores must not only be tightly bunched around a single value but also be highly correlated with (closely related to) the overall score outcome you want.

That means there are not one but two opportunities for numeric error to creep into our ratings: one from the measured rating category itself, the second from the degree to which that category is related to the final outcome, the “overall” rating.
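Both error sources can be checked with a short Python sketch. It uses hypothetical grades for five courses and the two categories discussed above, cloudiness and character. For each category, it measures how much the evaluators disagree on each course (source one) and how well the category's course averages track a hypothetical overall score (source two, measured with a Pearson correlation). All numbers are invented for illustration.

```python
from statistics import mean, pstdev

def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length lists."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5

# Hypothetical overall scores for five courses.
overall = [9.2, 8.7, 8.1, 7.6, 7.0]

# Hypothetical 1-10 grades from three evaluators per course, for two categories.
categories = {
    "cloudiness": [[3, 3, 4], [8, 8, 8], [2, 2, 3], [6, 6, 5], [4, 5, 4]],
    "character": [[10, 7, 9], [6, 10, 8], [9, 5, 7], [4, 8, 7], [7, 3, 6]],
}

for name, grades_per_course in categories.items():
    # Error source 1: evaluator disagreement on each course, averaged over courses.
    spread = mean(pstdev(grades) for grades in grades_per_course)
    # Error source 2: how closely the category's course averages track the overall score.
    r = pearson([mean(g) for g in grades_per_course], overall)
    print(f"{name}: avg rater spread={spread:.2f}, correlation with overall={r:.2f}")
```

With these made-up numbers, cloudiness is tightly bunched (spread of about 0.4) but essentially unrelated to the overall score (correlation near 0.05). Character tracks the overall score closely but scatters widely between evaluators (spread of about 1.6). So each category fails one of the two tests.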

Try convincing a mathematician that you can confidently distinguish between close scores or near ties using just such a category-based system.
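Here is a rough Python sketch of the near-tie problem. It assumes two hypothetical courses, each graded by eight evaluators, with averages an eighth of a point apart. It puts an approximate 95% interval around each average using the standard error and a normal approximation. That approximation is our simplification; a t-based interval for a panel this small would be wider still.

```python
from math import sqrt
from statistics import mean, stdev

def interval(grades: list[float], z: float = 1.96) -> tuple[float, float]:
    """Rough 95% interval for a course's average grade (normal approximation, assumed)."""
    m = mean(grades)
    se = stdev(grades) / sqrt(len(grades))  # standard error of the mean
    return m - z * se, m + z * se

def overlaps(a: tuple[float, float], b: tuple[float, float]) -> bool:
    """True if two intervals share any values, i.e. the averages can't be cleanly separated."""
    return a[0] <= b[1] and b[0] <= a[1]

# Hypothetical grades from eight evaluators for two closely matched courses.
course_p = [10, 9, 7, 9, 8, 9, 8, 9]  # average 8.625
course_q = [9, 8, 9, 10, 7, 8, 9, 8]  # average 8.5

p, q = interval(course_p), interval(course_q)
print(f"P: {mean(course_p):.3f}, interval {p[0]:.2f} to {p[1]:.2f}")
print(f"Q: {mean(course_q):.3f}, interval {q[0]:.2f} to {q[1]:.2f}")
print("Can the two be separated?", not overlaps(p, q))
```

Course P's average edges out Q's, yet their intervals (roughly 8.0 to 9.3 and 7.9 to 9.1) overlap almost entirely. Under these assumptions, the published order of P and Q is not statistically meaningful.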

A much better system would be one that avoids using categories completely to determine top golf rankings.